Contrary to popular belief, forensic comparisons, such as fingerprint comparisons, are carried out by a human, not a computer. For over 100 years, fingerprint experts have been able to testify in a court of law that two prints match to the exclusion of all other people, without any empirical evidence supporting the validity and reliability of their techniques. To support their claims of individualisation, experts typically refer to the uniqueness and permanence of fingerprints – that is, that no two people share the same fingerprint. However, errors do not arise because people have identical fingerprints; they arise because examiners incorrectly match prints that do not match.

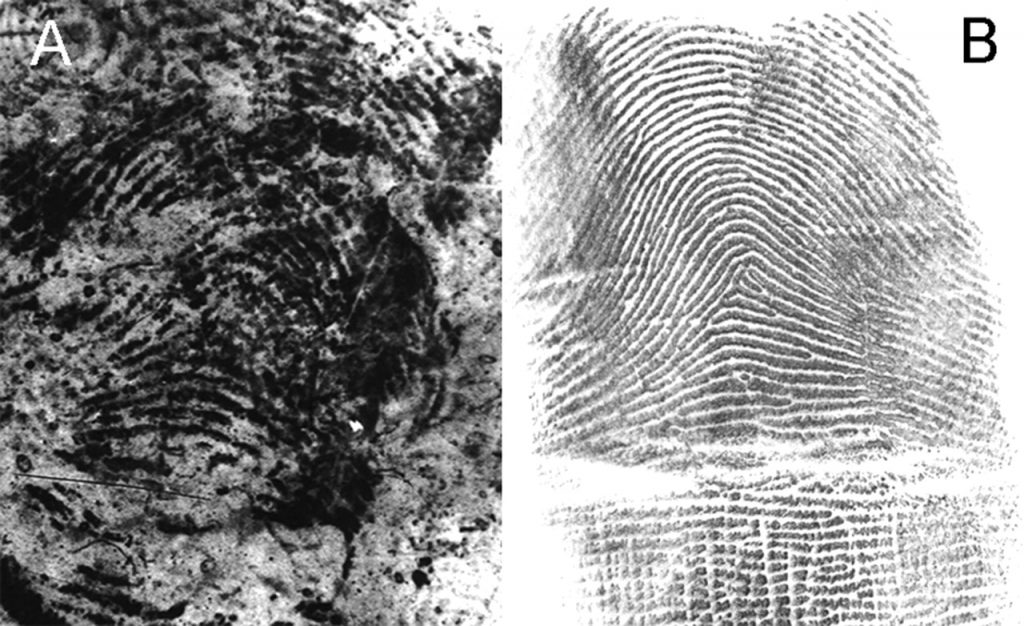

One of the most high-profile fingerprint misidentification cases is that of Brandon Mayfield, an American lawyer who was wrongly accused of carrying out the Madrid train bombings in 2004. A fingerprint found on a bag of detonators (see A below) was wrongly attributed to Mayfield, whose print (see B below) was in the FBI’s database because of his prior military service. Three FBI fingerprint experts concluded that the two prints were a “match”. Two weeks after Mayfield was arrested, the Spanish National Police informed the FBI that they had identified an Algerian man, Daoud Ouhnane, as the source of the fingerprint. The FBI then withdrew its identification of Mayfield and released him from custody.

[Source: Problem Idents, onin.com/fp/problemidents.html#madrid]

Misidentifications like Mayfield’s happen far more often than you might think. Saks and Koehler (2005) examined 86 DNA exoneration cases and found that forensic science testing errors and false or misleading forensic testimony occurred in 63% and 27% of cases, respectively. More than fifty people in this study alone were falsely convicted and incarcerated based on errors made during forensic analysis. In light of wrongful convictions like these, the U.S. National Academy of Sciences issued a scathing report calling for funding to determine the accuracy and error rates of forensic science techniques (National Research Council, 2009). The report also raised concerns about the language forensic experts use when testifying in court, stating that it is unclear how jurors interpret certain types of testimony, and whether they understand them at all.

My PhD research takes a cognitive and social psychology approach to tackle the question: how should forensic experts communicate their findings in court so that fact-finders can accurately evaluate the evidence?

Humans are notoriously bad at understanding and reasoning with probabilities and statistics (Gigerenzer & Edwards, 2003; Kahneman, Slovic, & Tversky, 1982) and tend to overweight small probabilities and underweight large ones (Kahneman & Tversky, 1979). Despite this, governing bodies in forensic science still insist on presenting evidence in the form of random match probabilities or likelihood ratios for the forensic comparison at hand. Random match probabilities and likelihood ratios attempt to quantify the likelihood of an error for a specific comparison, which is problematic because in any specific case, ground truth (i.e., whether the evidence actually does or does not belong to the defendant) simply cannot be known. These methods of presenting forensic testimony are also problematic because they focus on the methodology of the forensic technique and ignore the role of the human examiner in forensic decision-making. Simon Cole (2005) illustrates the interconnectedness of fingerprint methodology and human judgment quite nicely in this analogy:

“there is no methodology without a practitioner any more than there is an automobile without a driver, and claiming to have an error rate without a practitioner is akin to calculating the crash rate of an automobile provided it is not driven” (Cole, 2005).

As each stage of fingerprint comparison requires human judgment, a “methodological error rate” – which random match probabilities and likelihood ratios provide – is inherently unhelpful. Instead, I propose that forensic testimony should focus on the accuracy of the examiners who perform the forensic technique, rather than on the methodology (e.g., the uniqueness and permanence of fingerprints, as mentioned previously). I suspect that providing fact-finders with general information about how fingerprint examiners perform will make it easier for them to understand and interpret evidence presented in a specific case. In line with dual-process models (Petty & Cacioppo, 1986; Tversky & Kahneman, 1974), making forensic testimony easier to understand may make fact-finders more resilient to peripheral cues or factors that may (but shouldn’t!) influence their evaluation of the testimony – for example, whether the expert appears to be confident, likeable, or attractive, or whether their gender is congruent with the domain of their expertise.

If you’re interested in learning more about forensic science in the courtroom, please feel free to contact me at gianni.ribeiro@uqconnect.edu.au.

References:

Cole, S. A. (2005). More than zero: Accounting for error in latent fingerprint identification. The Journal of Criminal Law and Criminology, 95(3), 985-1078.

Gigerenzer, G., & Edwards, A. (2003). Simple tools for understanding risks: From innumeracy to insight. British Medical Journal, 327(7417), 741-744. doi: 10.1136/bmj.327.7417.741

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263-291.

Kahneman, D., Slovic, P., & Tversky, A. (1982). Judgment under uncertainty: Heuristics and biases. New York, NY: Cambridge University Press.

National Research Council (2009). Strengthening forensic science in the United States: A path forward. Washington, DC: The National Academies Press.

Petty, R. E., & Cacioppo, J. T. (1986). The elaboration likelihood model of persuasion. Advances in Experimental Social Psychology, 19(1), 123-205.

Saks, M. J., & Koehler, J. J. (2005). The coming paradigm shift in forensic identification science. Science, 309(5736), 892-895. doi: 10.1126/science.1111565

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185(4157), 1124-1131.